Digital Techniques for Utah History

By Nate Housley

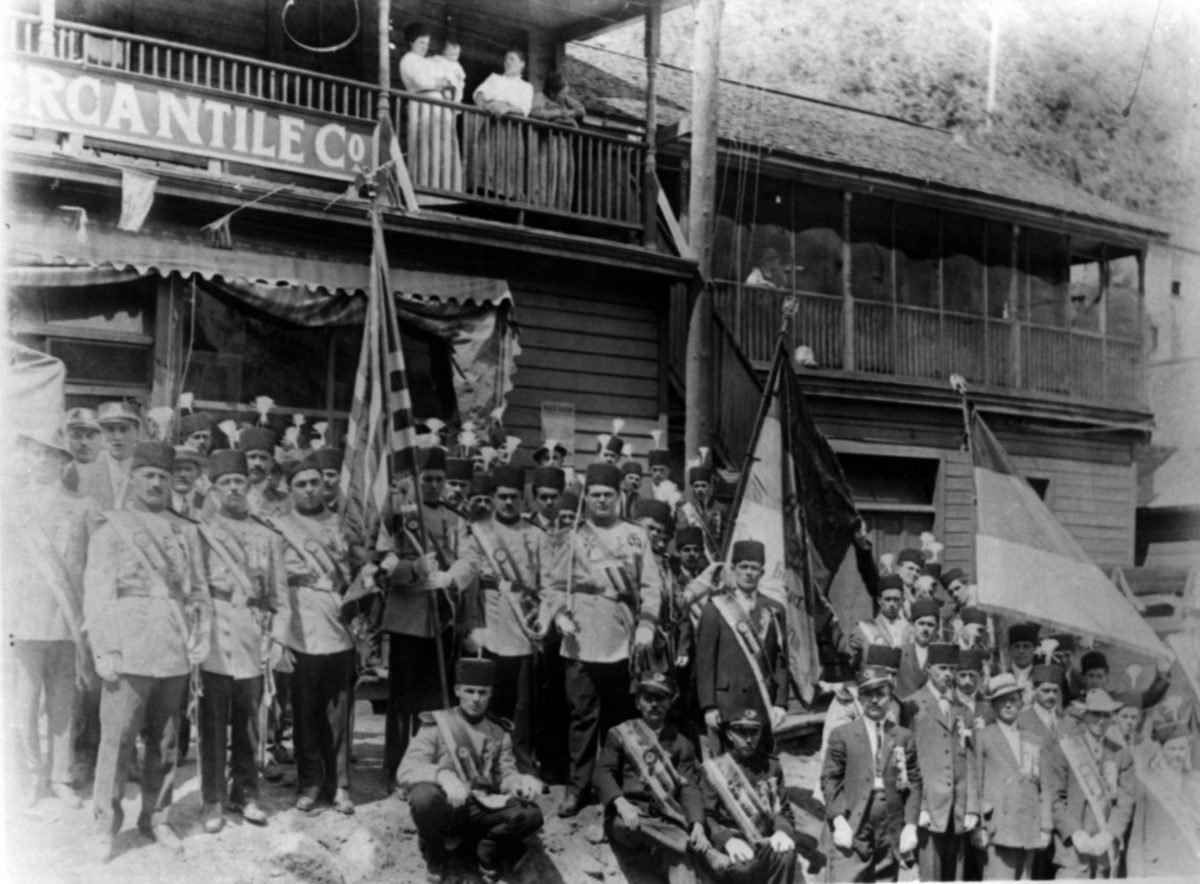

The Marriott Library at the University of Utah houses a number of oral history collections that can aid in interpreting Utah’s history. Several are available online to the general public, including the Carbon County Oral Histories. This collection highlights the experience of immigrants and their children from the early decades of the twentieth century, when railroads and mines in Utah sought cheap labor from abroad. The presence of non-Mormon immigrants and the coal mining industry made the area distinct enough that by 1894 Carbon County formed from neighboring Emery County, and the small Carbon County town of Helper became one of Utah’s most ethnically diverse. Utah’s Italian and Greek communities formed during this period, and immigrants from Yugoslavia, Finland, and Japan are also represented in the collection.

Because digital versions of these transcripts exist online, students of Utah history can apply digital humanities techniques to gain fresh perspectives. There is a lively discourse in the digital humanities about the concept of “distance reading,” much of which is germane to historical research. In contrast to the “close reading” that literary scholars might perform, distance reading involves using textual analysis to gain a kind of zoomed out perspective. Rather than automating the work of scholarship, this can create a new vantage point from which to interpret it.

Historians, perhaps playing against the type of the tweedy professor trawling a dusty archive, have been rather quick to embrace digital tools. The American Historical Association, with the help of George Mason University’s Center for History and New Media, launched its website in 1995. In 1999, Cambridge University Press published its CD-ROM set of the Trans-Atlantic Slave Trade Database, which has since become a cornerstone in contemporary understanding of Trans-Atlantic slavery. Digitized archival material and the internet continue to provide historians with unprecedented access to the past.

The 47 interviews in the Carbon County Oral Histories contain, cumulatively, around half a million words. Few would have the time or energy to read all of them. While the finding aids and subject headings can help a researcher to narrow down which interviews would be of the most interest, digital tools and practices exist to help us tease out connections and possibly raise questions for further study.

Perhaps the most user-friendly digital humanities tool is Voyant. The Voyant Tools website allows one to upload or link to a text corpus and begin poking around without any programming knowledge. Voyant will create a word cloud of the most common words in a corpus, provide contexts, and offer several other ways of peering into a text.

You can investigate the Carbon County Oral Histories for yourself here. Clicking the link will take you to a diagram of common words and their associations in the corpus. You can adjust the “context” slider to display more connections. By clicking on one of the words, you will go to the Voyant dashboard, where additional options are laid out.

I clicked “wine,” and found a strong correlation for that word in the corpus to “Americanization.” From a previous study of the oral histories of Italian immigrants to Carbon County, I found that food and drink was an important part of Italian culture. Additionally, the knowledge of wine-making that many of them brought over from Italy helped them (discreetly) form bonds with others outside the Italian community during the Prohibition years. (You can read more about my research here).

More advanced techniques are possible with open-source software, online documentation, and the patience to learn how to use them. The program R is popular with digital humanists for textual analysis, as it contains a plethora of powerful functions. The Programming Historian is a valuable resource for learning R, Python, and other languages and programs. After downloading R to my computer, I used a tutorial to find the most common terms used in each of the interviews. My exact code can be found at the bottom of this post.

The output looked like this (excerpt, emphasis added):

...

[5] "Art Jeanselme; aj; sheep; canyon; coal; pete"

[6] "Edna Burton; eb; edna; burton; finn; hiawatha"

[7] "Eldon Dorman; dorman; eldon; coal; sweets; jennings"

[8] "Elias Degn; coal; elias; jl; jesse; ton"

[9] "Emile Louise Cances; emile; bw; sunnyside; grandpa; daddy"

[10] "Emmanuel May; coal; hiawatha; gee; mechanic; sunnyside"

[11] "Fred Voll; fv; railroad; voll; coal; grande"

[12] "Gabriel Bruno; bruno; gabriel; glen; schooling; sicily"

[13] "George Poulas; gp; dk; celebrate; customs; easter"

[14] "Hal Schultz; hal; schultz; coal; airplane; flew"

[15] "Ivan and Edith Lambson; alger; edith; vee; ivan; elda"

[16] "James Gardner; jg; gardner; coal; huntington; cattle"

[17] "Joe Meyers and Walt Borla; jm; wb; myers; walt; borla"

[18] "Joseph Dalpaiz; jd; kt; mjd; helper; wages"

[19] "Kendall and Ona Barnett; ob; kendell; barnett; ona; coal"

[20] "Kosuye Okura; ko; okura; grandma; sake; laughter"

[21] "Leonard Shield; ls; coal; shield; leonard; hiawatha"

[22] "Lillie Erkkila Woolsey; lw; woolsey; finn; lillie; finnish"

[23] "Louis Pestotnick; uh; mines; moreland; hiawatha; coal"

[24] "Lucille Olsen; olsen; lucille; hiawatha; virg; blackhawk"

[25] "Marie Nelson; bw; anybody; huh; eighteen; sheep"

...

While some of the output is not very helpful (such as proper nouns and the word “uh”) there are some interesting insights. For example, a couple of the results include “sheep,” a topic that is not tagged on the Marriott Library website. Another mentions cattle. These records could be a great entry point into the ranching culture of immigrants in Utah during this period.

Becoming more comfortable with these sorts of research techniques can yield new pathways to interrogating the primary source records of the past, for academic historians and laypeople alike. Digital history in general, and distance reading in particular, are new methodological tools that historians will continue to utilize in order to deepen our understanding.

My R code:

# Install packages

install.packages("tidyverse")

install.packages("tokenizers")

# Load installed packages

library(tidyverse)

library(tokenizers)

# Load corpus and metadata file

input_loc <- "/path/to/folder"

setwd(input_loc)

metadata <- read_csv("metadata.csv")

files <- dir(input_loc, full.names = TRUE)

# Access table of word frequencies

base_url <- "https://programminghistorian.org/assets/basic-text-processing-in-r"

wf <- read_csv(sprintf("%s/%s", base_url, "word_freqency.csv"))

# Process document summaries

text <- c()

for (f in files) {

text <- c(text, paste(readLines(f), collapse = "\n"))

}

description <- c()

for (i in 1:length(words)) {

tab <- table(words[[i]])

tab <- data_frame(word = names(tab), count = as.numeric(tab))

tab <- arrange(tab, desc(count))

tab <- inner_join(tab, wf)

tab <- filter(tab, frequency < 0.002)

result <- c(metadata$Narrator[i], metadata$year[i], tab$word[1:5])

description <- c(description, paste(result, collapse = "; "))

}

descriptionWorks Cited:

Taylor Arnold and Lauren Tilton, “Basic Text Processing in R,” The Programming Historian 6 (2017), https://programminghistorian.org/en/lessons/basic-text-processing-in-r.

Daniel J. Cohen and Roy Rosenzweig, Digital History: A Guide to Gathering, Preserving, and Presenting the Past on the Web. Ebook. 2005. Accessed 2/25/2019. http://chnm.gmu.edu/digitalhistory/exploring/1.php